Readme

Intelligent image scaling to 4x resolution.

Examples

| Input | Output |
|---|---|

Usage

If your low-resolution images are in ./input, the following command saves the high-resolution results to ./output.

Run on GPU

This model requires an NVIDIA GPU that is compatible with CUDA 11.0.

    docker run -it --rm --gpus all \
      -v $PWD/input:/code/LR \
      -v $PWD/output:/code/sr_results \
      us-docker.pkg.dev/replicate/raoumer/isrrescnet:gpu
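To run the same container from a script, the command above can be wrapped in Python. This is a minimal sketch, not part of the project: `build_docker_command` and `upscale_folder` are hypothetical helper names, and the `-it` flag is dropped on the assumption that the run is non-interactive. The image name and the two mount points are copied from the command above.

```python
import subprocess
from pathlib import Path

# Image name copied from the docker command in this README.
IMAGE = "us-docker.pkg.dev/replicate/raoumer/isrrescnet:gpu"


def build_docker_command(input_dir, output_dir, image=IMAGE):
    """Return the docker argument list for one super-resolution run.

    Hypothetical helper for illustration; not provided by the project.
    """
    # Bind mounts need absolute host paths, so resolve both folders.
    src = Path(input_dir).resolve()
    dst = Path(output_dir).resolve()
    return [
        "docker", "run", "--rm", "--gpus", "all",
        "-v", f"{src}:/code/LR",          # low-resolution inputs
        "-v", f"{dst}:/code/sr_results",  # super-resolved outputs
        image,
    ]


def upscale_folder(input_dir, output_dir):
    """Create the output folder if needed, then run the container."""
    if not Path(input_dir).is_dir():
        raise FileNotFoundError(f"input folder not found: {input_dir}")
    Path(output_dir).mkdir(parents=True, exist_ok=True)
    # check=True raises CalledProcessError if docker exits with an error.
    subprocess.run(build_docker_command(input_dir, output_dir), check=True)
```

Calling `upscale_folder("input", "output")` from the directory that contains `./input` should be equivalent to the shell command, provided Docker and the NVIDIA container runtime are installed.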

Abstract

Deep convolutional neural networks (CNNs) have recently achieved great success on the single image super-resolution (SISR) task due to their powerful feature representation capabilities. The most recent deep-learning-based SISR methods focus on designing deeper or wider models to learn the non-linear mapping between low-resolution (LR) inputs and high-resolution (HR) outputs. These existing SR methods do not take into account the image observation (physical) model and thus require a large number of trainable network parameters and a great volume of training data. To address these issues, we propose a deep Iterative Super-Resolution Residual Convolutional Network (ISRResCNet) that exploits powerful image regularization and large-scale optimization techniques by training the deep network in an iterative manner with a residual learning approach. Extensive experimental results on various super-resolution benchmarks demonstrate that our method, with few trainable parameters, improves the results for different scaling factors in comparison with state-of-the-art methods.
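The "image observation (physical) model" mentioned in the abstract is not written out in this README. As a hedged illustration only, SISR is commonly formulated as follows, where $\mathbf{y}$ is the LR input, $\mathbf{x}$ the unknown HR image, $\mathbf{H}$ a blur-and-downsample operator, $\mathbf{n}$ noise, $R$ a regularizer and $\lambda$ its weight. These symbols are assumptions chosen for this sketch and may differ from the paper's notation.

$$
\mathbf{y} = \mathbf{H}\mathbf{x} + \mathbf{n}, \qquad
\hat{\mathbf{x}} = \arg\min_{\mathbf{x}} \; \tfrac{1}{2}\lVert \mathbf{y} - \mathbf{H}\mathbf{x} \rVert_2^2 + \lambda R(\mathbf{x})
$$

The first term ties the estimate to the observed LR image through the degradation operator, and the second encodes prior knowledge about natural images. Problems of this form are typically solved iteratively, which is the general setting that "regularization" and "optimization techniques" refer to.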

Video presentation

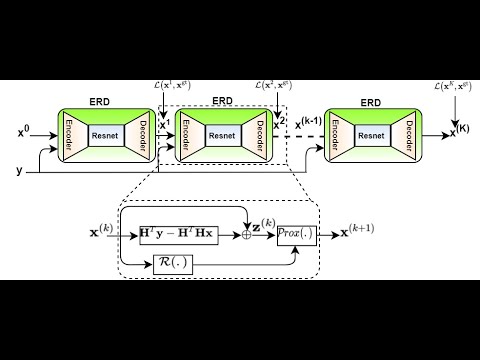

ISRResCNet Architecture

Overall representative diagram

ERD block

Quantitative Results

Average PSNR/SSIM values for scale factors x2, x3, and x4 with the bicubic degradation model. The best performance is shown in red and the second best performance is shown in blue.
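For readers unfamiliar with the metric, PSNR is defined as 10 · log10(MAX² / MSE), where MSE is the mean squared error between the reference HR image and the super-resolved output. The sketch below computes it in plain Python for 8-bit grayscale images stored as lists of rows. It is not the authors' evaluation script: SR benchmarks often compute PSNR on the luminance channel after cropping image borders, which this example omits, and SSIM is not covered. The function name `psnr` and the sample pixel values are made up for illustration.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """PSNR in dB between two equally sized grayscale images (lists of rows)."""
    if len(reference) != len(estimate):
        raise ValueError("images must have the same height")
    squared_error = 0.0
    count = 0
    for ref_row, est_row in zip(reference, estimate):
        if len(ref_row) != len(est_row):
            raise ValueError("images must have the same width")
        for r, e in zip(ref_row, est_row):
            squared_error += (r - e) ** 2
            count += 1
    if count == 0:
        raise ValueError("images must not be empty")
    mse = squared_error / count
    if mse == 0:
        return math.inf  # identical images: PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / mse)


# Made-up 3x3 patches standing in for an HR reference and an SR result.
hr = [[52, 55, 61], [59, 79, 61], [76, 62, 59]]
sr = [[53, 54, 61], [60, 78, 62], [75, 62, 60]]
print(f"PSNR: {psnr(hr, sr):.2f} dB")
```

Higher values mean the output is closer to the reference. A table entry is then the average of this value over all images in a benchmark set.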

Visual Results

Visual comparison of our method with other state-of-the-art methods for x4 super-resolution on the SR benchmarks. To compare results on the benchmarks yourself, you can download our results from Google Drive: ISRResCNet.

Code Acknowledgement

The training code is based on ResDNet and deep_demosaick.